Lifescope Project

An AI software looking for solutions to healthcare problems

Some history

In March 2017, my friend Juan Fernández invited me to join him in developing a piece of software he had been working on as a side project: a Python script that used spaCy to analyze messages coming from a Twitter stream.

The idea was to use spaCy's Natural Language Processing (NLP) engine to find English-language messages that propose a solution to a healthcare problem, tag both the problem and the solution, and discard irrelevant messages.

The script also analyzed the author of each message and tagged it automatically (e.g., doctor, news source, patient).
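To make the author-tagging step more concrete, here is a deliberately simplified sketch. It is not how the script actually works (the original relied on spaCy); it swaps in plain keyword rules matched against a profile bio, and the rule table `AUTHOR_RULES` and the function `tag_author` are hypothetical names chosen for illustration.

```python
import re

# Hypothetical keyword rules, checked in order; the real script used spaCy.
AUTHOR_RULES = {
    "doctor": ("md", "physician", "doctor", "surgeon", "cardiologist"),
    "news source": ("news", "journal", "magazine", "reporter"),
    "patient": ("patient", "survivor", "living with"),
}


def tag_author(bio: str) -> str:
    """Return the first tag whose keywords appear as whole words in the bio."""
    text = bio.lower()
    for tag, keywords in AUTHOR_RULES.items():
        for keyword in keywords:
            # \b anchors avoid matching "md" inside unrelated words.
            if re.search(r"\b" + re.escape(keyword) + r"\b", text):
                return tag
    return "unknown"
```

For example, `tag_author("Cardiologist and researcher")` returns `"doctor"`, while a bio with no matching keyword falls back to `"unknown"`. A real classifier would need far more than keywords, which is exactly where an NLP engine earns its keep.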

He had a first version of the script working on his computer, but he wanted a way to show it to the world. So I thought we could turn it into a web application accessible to everyone and make everything open source. The first version of our software is now available online at https://www.lifescope-project.com.

Architecture

From local script to the cloud

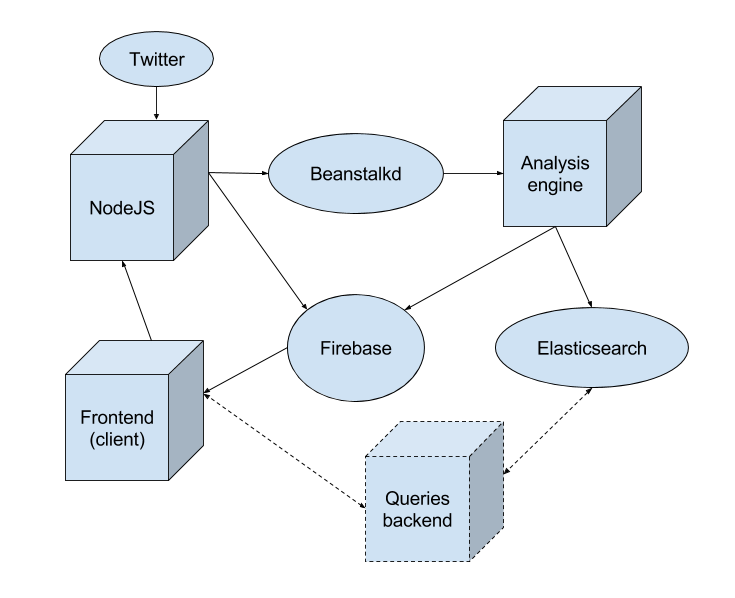

After experimenting with Flask, I decided that the best solution was to separate the analyzer from the Twitter stream and the backend services. We wanted a system that scales and is easy to maintain, so I came up with the following microservice-based architecture:

- A Node.js service that sends messages from the Twitter stream to a beanstalkd job queue.

- The Python analysis engine, which now only takes jobs from beanstalkd and sends the results to Firebase and Elasticsearch.

- Firebase DB, serving the analyzed messages in real time to the frontend.

- A React + Redux frontend that serves as a showcase for the project, with an introduction, a link to the project's blog, and a real-time timeline of analyzed messages.
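The flow between these services can be sketched in a few lines of Python. This is only an in-process illustration under stated assumptions: a `queue.Queue` stands in for the beanstalkd tube (a real client would reserve and delete jobs over the beanstalkd protocol), two lists stand in for Firebase and Elasticsearch, and `analyze` is a hypothetical placeholder for the spaCy-based engine.

```python
import json
import queue

# Stand-ins (assumptions): queue.Queue for the beanstalkd tube,
# plain lists for the Firebase timeline and the Elasticsearch index.
jobs = queue.Queue()
firebase_timeline = []
elasticsearch_index = []


def produce(message):
    """Streaming side: serialize a message and put it on the job queue."""
    jobs.put(json.dumps(message))


def analyze(message):
    """Hypothetical placeholder for the spaCy engine: a naive keyword check."""
    return {**message, "relevant": "cure" in message["text"].lower()}


def consume_all():
    """Analysis side: drain the queue and send relevant results to both stores."""
    while True:
        try:
            raw = jobs.get_nowait()
        except queue.Empty:
            break
        result = analyze(json.loads(raw))
        if result["relevant"]:
            firebase_timeline.append(result)    # real-time feed for the frontend
            elasticsearch_index.append(result)  # permanent store for statistics
        jobs.task_done()


produce({"id": "1", "source": "twitter", "text": "New cure for migraines found"})
produce({"id": "2", "source": "twitter", "text": "Lovely weather today"})
consume_all()
print(len(firebase_timeline))  # → 1
```

The point of the split is that the producer and the consumer only share the queue and the message format, so either side can be restarted, scaled, or replaced independently.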

Features

With this architecture, we can show the results of the analysis in real time while also saving them permanently to Elasticsearch, which will ease integration with an upcoming statistics module. Firebase handles the WebSocket connections, so our services only need to respond to on-demand requests, which makes scaling less demanding. We purge older messages from the Firebase DB every three hours so that it loads quickly; as a result, the free plan with 2 GB of storage is more than enough for our needs.
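The three-hour cleanup boils down to picking the records older than a cutoff. The sketch below shows only that selection logic on an in-memory dictionary; it assumes each record carries a hypothetical `created_at` Unix timestamp, and in production the resulting ids would be deleted from Firebase by a scheduled job rather than computed from a local dict.

```python
import time
from typing import Optional

THREE_HOURS = 3 * 60 * 60  # retention window, in seconds


def expired_ids(messages: dict, now: Optional[float] = None,
                max_age: float = THREE_HOURS) -> list:
    """Return the ids of messages older than `max_age` seconds.

    `messages` maps message ids to records with a hypothetical
    `created_at` Unix timestamp (seconds). The returned ids are the
    candidates for deletion.
    """
    if now is None:
        now = time.time()
    cutoff = now - max_age
    return [msg_id for msg_id, msg in messages.items()
            if msg["created_at"] < cutoff]
```

Passing `now` explicitly keeps the function deterministic, which makes it easy to test; a message exactly three hours old is kept until the next run.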

The analysis engine is now independent of the source: it simply processes jobs that arrive from beanstalkd in a generic JSON format. We can (and will) extend the streaming services to provide sources other than Twitter (e.g., specialized news feeds).
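One way to picture a source-agnostic job format is a set of small adapters that map each source onto the same JSON fields. The schema below (`source`, `id`, `text`, `author`) is an assumption for illustration, not the project's actual format; the tweet adapter reads the `id_str`, `text` and `user.screen_name` fields of a Twitter API v1.1 tweet object, while the news-feed entry keys are hypothetical.

```python
import json

# Hypothetical source-agnostic job schema; field names are illustrative.
REQUIRED_FIELDS = ("source", "id", "text", "author")


def make_job(source: str, item_id: str, text: str, author: str) -> str:
    """Serialize one message into the generic JSON job format."""
    return json.dumps({"source": source, "id": item_id,
                       "text": text, "author": author})


def from_tweet(tweet: dict) -> str:
    # Twitter API v1.1 tweet objects carry id_str, text and user.screen_name.
    return make_job("twitter", tweet["id_str"], tweet["text"],
                    tweet["user"]["screen_name"])


def from_feed_entry(entry: dict) -> str:
    # Hypothetical news-feed entry with link/title/publisher keys.
    return make_job("news", entry["link"], entry["title"], entry["publisher"])


def parse_job(raw: str) -> dict:
    """Analyzer side: accept any source as long as the required fields exist."""
    job = json.loads(raw)
    missing = [field for field in REQUIRED_FIELDS if field not in job]
    if missing:
        raise ValueError(f"job is missing fields: {missing}")
    return job
```

With this split, adding a new source means writing one more adapter; the analyzer's `parse_job` does not change.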

The Node.js service also exposes an endpoint to which the frontend can post new messages, so visitors can test the analysis engine and see the results right away on the timeline.
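The real endpoint lives in the Node.js service; purely to keep every example in one language, here is a rough Python equivalent written as a minimal WSGI app. The request body shape `{"text": ...}`, the `"web"` source label, the status codes and the in-process `queue.Queue` standing in for beanstalkd are all assumptions.

```python
import json
import queue

# In-process stand-in for the beanstalkd tube (assumption for this sketch).
jobs = queue.Queue()


def submit_app(environ, start_response):
    """Accept a POSTed JSON body like {"text": "..."} and enqueue it as a job."""
    if environ.get("REQUEST_METHOD") != "POST":
        start_response("405 Method Not Allowed", [("Content-Type", "text/plain")])
        return [b"POST only"]
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        body = json.loads(environ["wsgi.input"].read(length) or b"{}")
        text = body["text"]
        if not isinstance(text, str) or not text.strip():
            raise ValueError("empty text")
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing field, or a non-object body.
        start_response("400 Bad Request", [("Content-Type", "text/plain")])
        return [b"expected a JSON object with a non-empty 'text' field"]
    # Hypothetical job shape, tagged so the analyzer knows it came from a visitor.
    jobs.put(json.dumps({"source": "web", "text": text}))
    start_response("202 Accepted", [("Content-Type", "application/json")])
    return [json.dumps({"queued": True}).encode()]
```

For local testing, the standard library's `wsgiref.simple_server.make_server("", 8000, submit_app).serve_forever()` would serve it. Answering `202 Accepted` reflects that the message is only queued; the analyzed result shows up later on the timeline through Firebase.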

Deployment

Everything is dockerized, which makes installing and deploying the whole system a piece of cake and lets us easily move our services if demand grows. We deployed it to a VPS, where it runs 24/7, building up an interesting database to analyze with Big Data tools.

And why did you…

- Use React and Redux for such a simple frontend? For me, side projects are a chance to experiment with technologies I'm not yet an expert in. I started writing it with Angular, but since my other active projects already used Angular, I rewrote it with React and Redux to learn something I don't always use in my day job. React alone might have been enough, but if the frontend grows into something more complex (which is quite likely), Redux will make it easier to scale.

- Use a VPS instead of deploying it to AWS? A VPS is really cheap, and for this first phase it is more than enough for our needs. Also, we're not afraid of maintaining a couple of Linux machines ourselves :)

Further steps

Now it's time to take advantage of the information stored in Elasticsearch. The next step is to build a new backend service (most likely Clojure-based) that provides the frontend with the results of a Big Data analysis. D3.js will certainly be a useful tool for plotting that data on the frontend.
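As a hint of what such a statistics service might ask Elasticsearch, here is a search body built in Python (the service itself would likely be Clojure, and this is not its actual query). It uses the standard `range` query with date math and the `terms` aggregation from the Elasticsearch query DSL; the field names `created_at` and `author_tag` are hypothetical and depend on how the analyzer indexes its results.

```python
def author_stats_query(days: int = 7, size: int = 10) -> dict:
    """Build an Elasticsearch search body counting recent messages per author tag.

    Field names `created_at` and `author_tag` are hypothetical; they depend
    on how the analyzer indexes its results.
    """
    return {
        "size": 0,  # only the aggregation buckets are needed, not the documents
        "query": {"range": {"created_at": {"gte": f"now-{days}d/d"}}},
        "aggs": {"by_author": {"terms": {"field": "author_tag", "size": size}}},
    }
```

Sending this body to an index's `_search` endpoint would return one bucket per author tag with its message count, the kind of series D3.js could turn into a bar chart.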

Do you have any questions or remarks? Feel free to ask us anything in the comments section.